Is an Executive Order on AI Enough?

November 27, 2023 | Feature Article

President Biden’s Executive Order 14110

On October 30, 2023, President Biden signed Executive Order 14110 on the safe, secure, and trustworthy development and use of AI. However, this is not the first AI-related guidance his administration has published. It previously released a Blueprint for an AI Bill of Rights and an AI Risk Management Framework. But both the blueprint and the framework suffer from the same fatal flaw: they are voluntary recommendations and are therefore unenforceable. The question, then, is whether this executive order is any better.

The executive order begins by promising that the federal government will take many actions to protect the public from the risks of AI. One of the first promises is that the federal government will create a labeling mechanism so that people know when content is AI-generated. While that sounds good in theory, there is no practical way for the government to fulfill this promise. Many AI-generated content detectors already exist, such as GPTZero, Undetectable AI, and Sapling; however, none of them is 100% accurate. I even tested this by running this article through several detectors, and half of them flagged it as containing AI-generated content. A recent study by researchers at the University of Maryland also mathematically proved that as AI improves, it will become impossible to distinguish with certainty between AI-generated and human-created content. Furthermore, AI-generated content detectors have also exhibited bias against non-native English speakers.

Some of the other promises include protecting data privacy, combating discriminatory uses of AI, and investing in AI-related education and job training. While these goals are important, they are all promises to do something in the future. There is no concrete plan or explanation of how the government will fulfill them. Instead, the order sets deadlines for various regulatory agencies and agency heads to develop plans for fulfilling these promises. And we have seen agencies fail to follow similar requirements in past executive orders. For example, after former President Trump signed an executive order on AI in 2019, only the Department of Health and Human Services implemented a plan that met the order’s requirements. So what makes President Biden’s executive order any more likely to be followed?

While the executive order makes a lot of promises, it also takes two important steps. The first is a requirement that companies disclose the results of their AI safety tests to the government. While some large companies had already voluntarily agreed to do this, it is now mandatory for every company developing AI that could pose a risk to US health and safety. However, the order gives no guidance on what a company should do if its AI fails a safety test. If the AI has not yet been released to the public, then it clearly needs more development. But may the company release it to the public anyway? And if the AI is already publicly available, what does the company have to do? Does it have to take the AI down until it passes the safety tests? Does it have to post a disclaimer stating that the AI failed the safety tests? This lack of guidance is a recurring issue throughout the executive order.

The second important step is the establishment of a White House AI Council consisting of various Cabinet members and heads of regulatory agencies. A coordinated oversight body could be instrumental in ensuring that the federal government fulfills its promises. However, the order gives little information on what exactly this council will do. It says only that the council will coordinate the efforts of the different agencies across the federal government, and the effectiveness of that coordination remains to be seen.

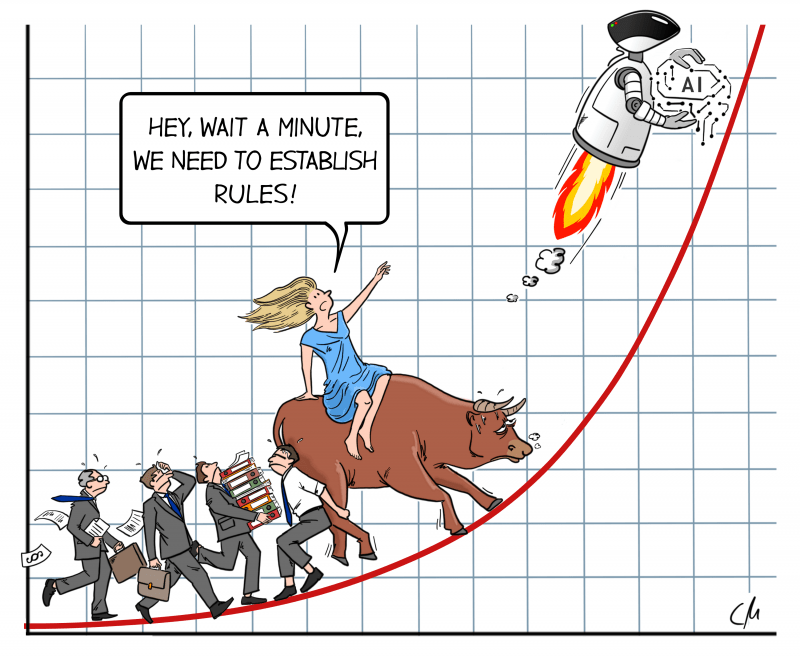

Going back to the original question: is this executive order any better? Only slightly. The executive order is weak. It makes a lot of promises without anything to back them up, and the steps it does take are not fully explained. There is no guarantee it will change anything. But it does start the process of regulating AI. So, what should the federal government do next?

The European Union AI Act

The EU has consistently been ahead of the US in adopting technology regulations that benefit consumers. Last year, it passed legislation requiring a standard USB-C charging port on many electronic devices, such as phones and tablets, which is why Apple is once again changing its charging cable. And earlier this year, the European Parliament voted in favor of regulating loot boxes in video games. Now, the EU is working on a comprehensive AI Act that will regulate many kinds of AI.

The core of this proposed regulation is the division of all AI into four risk categories, based on each AI system’s type and capabilities, with each category regulated accordingly.

The first category is unacceptable risk. It would include AI used for social scoring and systems that perform real-time remote biometric identification of people in public spaces. These systems would be banned outright.

The second category is high risk. It would include AI covered by the EU’s product safety legislation, such as medical devices, and AI used in eight specific areas, such as biometric identification and law enforcement. These systems would be heavily regulated, with obligations placed on both their creators and their users. Requirements for AI in this category include establishing and maintaining a risk management system, ensuring human oversight, and using the AI according to its instructions.

The third category is limited risk. It would include generative AI, chatbots, deepfakes, and certain other systems. These systems would be subject to transparency obligations so that users know when they are interacting with AI or viewing a deepfake.

The fourth and final category is minimal or no risk. It would include all other AI, which would be left unregulated because of its low risk.

Under this four-tier system, the EU attempts to tailor regulations to the capabilities and risks of different types of AI. Furthermore, once passed, this regulation would be enforceable against companies that create AI.

Is More Regulation the Right Answer?

Some critics may argue that regulating AI would stifle innovation and progress. But that argument fails to recognize the serious risks that AI poses. First, AI is not trustworthy because it can make up facts. This has already led lawyers to cite fake court cases in their filings after asking AI a legal question. Second, AI can expose confidential or personal information, especially when it is trained on personal information from the web. Third, AI can perpetuate bias and discrimination. This has already happened when Microsoft’s AI chatbot started spouting racist slurs. Without regulation, companies are free to create risky AI. But effective regulation would reduce these risks without stifling innovation, because an AI does not need to make up facts, expose personal information, or perpetuate bias in order to be innovative.

Conclusion

As Will McAvoy of HBO’s The Newsroom once said, “The first step in solving any problem is recognizing there is one.” President Biden’s executive order is a clear recognition from the government that there is a problem: the US needs to start regulating AI. But it is only the first step. We need to follow the EU’s example with specific, enforceable legislation from Congress that defines different categories of AI and their requirements. Otherwise, the public will be left defenseless.

Suggested Citation: Akshat Shah, Is an Executive Order on AI Enough?, Cornell J.L. & Pub. Pol’y, The Issue Spotter (November 27, 2023), https://live-journal-of-law-and-public-policy.pantheonsite.io/is-an-executive-order-on-ai-enough/.

Akshat Shah is a second-year law student at Cornell Law School. He graduated from Rensselaer Polytechnic Institute with degrees in computer science and economics. Aside from his involvement with the Cornell Journal of Law and Public Policy, Akshat is co-vice president of the South Asian Law Student Association.
