Facial Recognition Software, Race, Gender Bias, and Policing

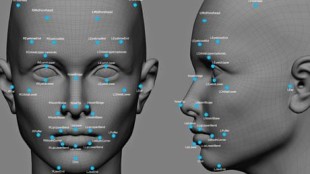

Facial Recognition Technology (FRT) identifies a person’s face by navigating through computer programs that access thousands of cameras worldwide to identify a suspect, criminal, or fugitive. FRT can even accurately identify a person from a blurry captured image or instantaneously pick the subject out of a crowd. This is the fantasy portrayed in Hollywood movies. In reality, facial recognition software is inaccurate, biased, and under-regulated.

FRT creates a template of a person’s facial image and compares that template to millions of photographs stored in databases: driver’s license photos, mugshots, government records, or social media accounts. While this technology aims to accelerate law enforcement investigative work and identify crime suspects more accurately, it has been criticized for its bias against African-American women.

The National Institute of Standards and Technology (NIST), the arm of the United States government responsible for establishing technology standards, conducted a test in July of 2019 that showed FRT’s bias against African-American women. NIST tested the accuracy of algorithms from Idemia, a French company that provides facial recognition software to police in the United States. The software mismatched white women’s faces once in 10,000 instances, but it mismatched black women’s faces once in 1,000 instances, a rate ten times higher. This disparity is a result of the failure of current facial …