“Smile! You’re on Camera” – The Implications of the Use of Facial Recognition Technology

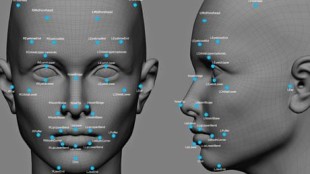

(Source)

What is the first thing that comes to mind when you hear the phrase ‘facial recognition technology’? Is it a TV show or movie scene in which law enforcement officers stare at computer monitors while faces from a database cycle past and a software program looks for a match to an image of a suspect, victim, or witness in the case? Many people associate the phrase ‘facial recognition technology’ with the government and law enforcement, an association reinforced by the way numerous procedural TV shows (such as FBI, Hawaii Five-0, Blue Bloods, and Law and Order: SVU) depict facial recognition in their episodes. For many Americans, those TV and movie scenes are their primary exposure to facial recognition, which strengthens its association with law enforcement.

While facial recognition technology (also known as facial recognition or FRT) is certainly a tool used by government and law enforcement officials, its uses and capabilities extend far beyond what the entertainment industry depicts. The concept of facial recognition began in the 1960s with a semi-automated system, which required an administrator to select facial features on a photograph before the software calculated and …